The Historical Parallels: Preliminary Reflection

Despite the unique features of advanced AI systems, a thorough study of historical examples of emergent technologies offers insight into how to navigate between their risks and benefits.

The rapid advances in AI development have left the field mostly devoid of large-scale studies of the historical and sociological lessons from earlier, similar developments. While AI is in some respects unique, both in its potential benefits and in its risks, it shares similarities with earlier major technological breakthroughs. Although some of these have been studied intensely,1 work remains to be done in building a coherent overview of the shifting dynamics that new technologies cause.

A new technological breakthrough disrupts the fabric of society, at first locally and later, inevitably, globally. The discussion around AI safety is dominated by professionals with a technological perspective. A good researcher, though, might not be a good strategist,2 and dismissing the historical lessons is a blind spot we may not be able to afford.

Studying the transitional periods of major technological breakthroughs and their effect on societal dynamics will arguably reveal recognizable patterns. Recognizing and understanding these patterns should leave us better prepared to design good governance solutions for the age of artificial intelligence.

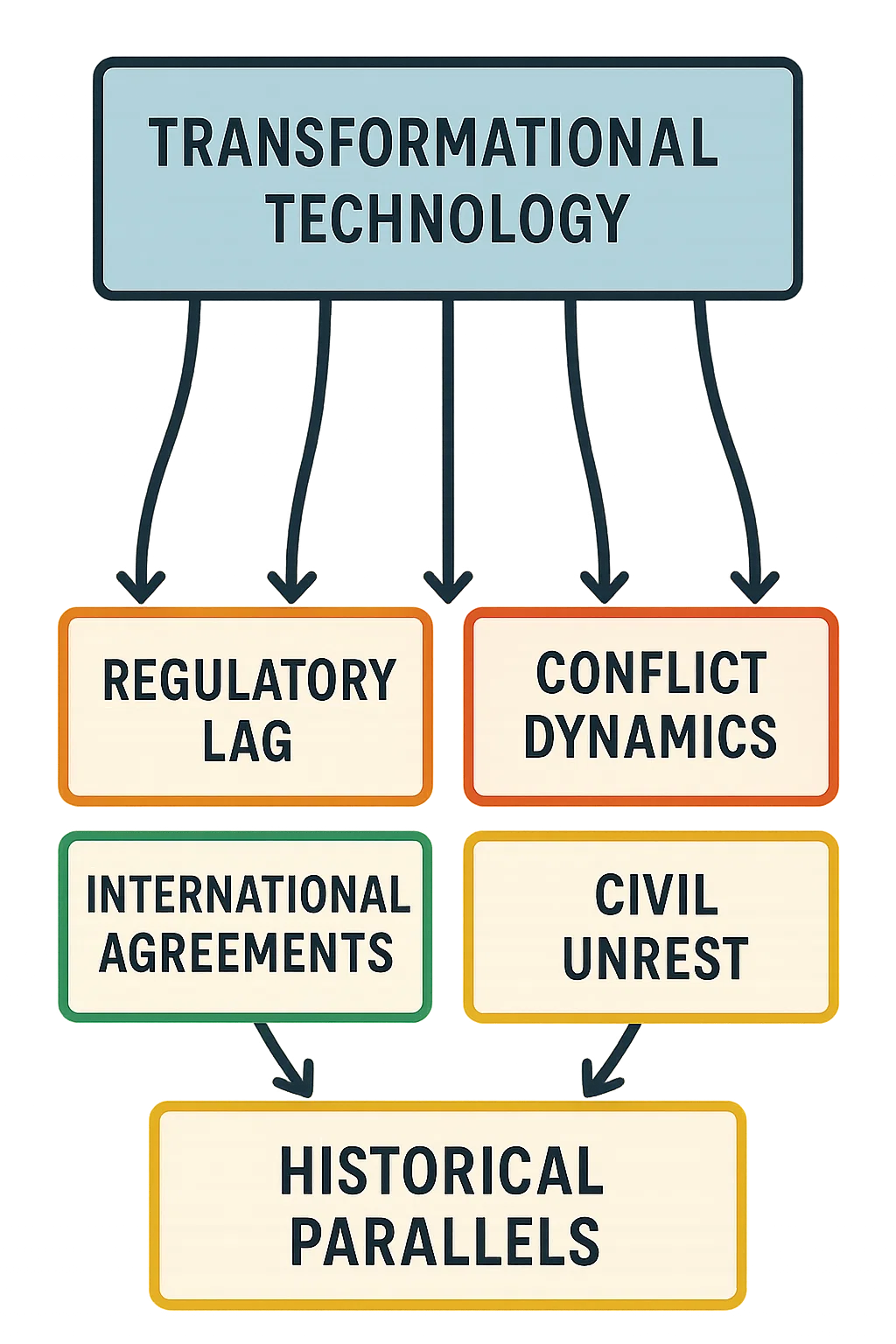

In this post I present a research agenda, split into four separate themes of reactive patterns. The text is a map of the territory rather than an in-depth exploration of each area, and is meant to provide a backbone for further research. Each of these themes warrants a study of its own. The historical cases within each theme should be studied together, not in isolation, to form an understanding of the high-level patterns.

The themes are:3

Regulatory lag and warning shots

Conflict dynamics and emergent technology

Technological disruption’s effect on civil society

International agreements on technological restrictions

1. Regulatory lag and warning shots

Warning shots in the context of technology are situations where a (new) technology creates disvalue through some event(s), but less than it has the potential to create. Such an event moves the Overton window, sparks public discussion and gets policy-makers moving. While we may not get a warning shot with catastrophically dangerous AI, it is potentially highly important to understand the dynamics and timelines of new technologies, the regulatory lag that follows them, and the warning shots that typically end it.

With each new technology, there is usually an initial period of low regulation. This period can sometimes continue for a long time despite concerned voices: as long as nothing bad happens, regulators tend to be reluctant to regulate anything that has commercial value.

A thorough overview of the history of warning shots would give us a clearer picture of the balance between overregulating and underregulating, measured by the disvalue created. Such a study would need to cover a wide collection of cases. Using the data from these cases, we can build a framework for identifying the pattern by which societies recognize and respond to technological risks:

Emergence of a new technology (in public use)

Initial dismissal of warnings

Catalyzing event(s) that change public perception, i.e. the warning shot(s)

Institutional adaptation and governance

New equilibrium

Monitoring

Industrial pollution in the 19th and 20th centuries is a prime, well-documented example of both regulatory lag and warning shots. Below are some other especially worthwhile examples representing the different perspectives discussed in this document.

Chemical weapons. Although the major powers had deliberately developed chemical weapons for use in warfare, they were horrified by the first large-scale use of them in the First World War. Starting at Ypres in 1915, these weapons killed approximately a hundred thousand people and permanently injured 200,000 to 300,000. The experience was instrumental in the creation of the Geneva Protocol of 1925, but a huge price was paid before the ban.

Ozone depletion. The so-called ozone hole was discovered in the 1980s, and it led to the Montreal Protocol in 1987, which phased out CFCs. This is a good example of how catastrophic damage can be mitigated before the worst scenarios materialize.

Thalidomide crisis. This insufficiently tested drug caused approximately 10,000 to 12,000 children worldwide to be born with severe birth defects between 1957 and 1962. The crisis led to fundamental reforms in pharmaceutical testing and approval processes.

SS Eastland disaster. After maritime regulations required more lifeboats following the Titanic disaster, the added weight made ships more top-heavy, contributing to the capsizing of the SS Eastland, which killed 844 people in 1915. This is an example of how well-intentioned regulations can create unforeseen risks if technological systems aren't considered holistically.

These examples show how technological developments across different domains have produced warning events that revealed unforeseen dangers, often leading to governance responses—though typically after harm had already occurred.

2. Conflict dynamics and emergent technology

The natural state of human societies is competition. This is equally true of different forms of collectives, including tribes, kingdoms, nation-states, corporations and military alliances. Warfare has been a key driver of technological advancement throughout the history of humankind. While our civilization has somewhat pivoted during the 20th and 21st centuries, conflict emerging from competition is still a defining element of it.

New technological breakthroughs alter the competitive balance, forcing actors to react in various ways. This framing is closely related to Power Transition Theory,4 which offers a framework for understanding how shifts in relative power between states, often accelerated by technological change, can lead to conflict.

Earlier examples of new, powerful technologies and their effect on the status quo between (great) powers offer a very clear parallel to the current situation with AI development. Understanding the consequences of different responses to new technologies can help us craft implementable AI governance.

Many of the more recent cases have been studied fairly thoroughly: in addition to nuclear weapons, the transition in naval warfare in the early 20th century, strategic bombing capacities in World War II, and the railroad-telegraph combination are quite well understood. Among the less studied cases that I suggest as viable for pattern recognition are:

Ocean-going vessels and their effect on the dynamics between superpowers. Spain and Portugal were the first countries to build caravels and carracks, and were thus able to expand their resource pool far beyond that of their European competitors. This gave these two countries of the Iberian Peninsula a relative lead, and the 16th century was the period of their hegemony. Importantly, it was also a century of constant warfare: most of the wealth accumulated through their new tools was spent on larger armies and on fighting their enemies.

Russia and the loss of the First World War. It is widely argued that one of the leading reasons for Russia’s poor performance in the Great War was its underdeveloped industrial base. Russia acted like a superpower without having the means to do so, which led to a catastrophic war effort and eventually to revolution and civil war. A similar situation could arguably arise with modern great powers that have fallen or will fall behind in the AI competition.

Ottomans and the erosion of their power position. The Ottomans were the leading superpower of the Mediterranean and the Middle East for centuries, but they never built ocean-going vessels and they industrialized late. They sided with the Central Powers in the Great War, and were crushed and divided. This eventually led to the fragmentation of the Middle East and the Balkan Peninsula, and to numerous civil wars. Their example shows modern nation-states the importance of keeping up with development, and shows researchers the price of failed states. AI might similarly lead to failed states, and especially with nuclear-armed countries, we should strongly aim to avoid situations like those of Russia and the Ottomans a hundred years ago. What would have happened if they had had nuclear weapons?

The study of conflict dynamics benefits from a large existing literature, especially from the military perspective. A notable example is Robert Jervis’s offense-defense balance theory, which suggests that when new technologies favor offensive capabilities, security dilemmas intensify and arms races become more likely. The Superintelligence Strategy from March 2025 (Hendrycks, Schmidt and Wang), which introduced MAIM (Mutual Assured AI Malfunction), further develops this argument in the AI context.5

In AI development, the potential for rapid, covert capability advancement creates a similar dynamic where defensive measures lag behind offensive applications, potentially accelerating competition among major powers. Good historical examples of offense-defense balance that should be studied together include:

Chariot warfare. The introduction of horse-drawn war chariots transformed battlefield dynamics across the ancient Near East and Egypt. Chariots initially provided overwhelming advantages to early adopters, but technological diffusion eventually led to new military doctrines and defensive countermeasures. This parallels the potential of leading AI developers to gain temporary but significant advantages.

Greek Fire. The Byzantine Empire's secret incendiary weapon provided naval supremacy for centuries. Its closely guarded formula demonstrates early information security and technological secrecy as strategic advantages.

Gunpowder revolution. The gradual spread of gunpowder weapons from China to Europe transformed warfare, castle design, and eventually the structure of states themselves as effective mass armies could be trained quickly and cheaply. The development shows how a fundamental technology can reshape power balances and render traditional defenses obsolete.

3. Technological disruption’s effect on civil society

Advanced AI systems affect the fabric of civil society, both immediately and over the long term. Major disruptions of the social contract6 - especially if they are fast and/or created by a single group of actors - tend to cause civil unrest. The important research questions from this perspective can be divided into two:

What is the “tipping point” where large-scale rioting and revolutions begin?

What kinds of negative long-term societal externalities have technological advancements had?

The negative impacts of the current trajectory of AI development have been discussed and studied, but not thoroughly in their historical context. Elements such as job loss, deep feelings of lack of control and technological pessimism all have historical parallels, and have been met with various reactions.

Adjacent to both of these problems is the so-called distribution problem, where the rewards and prosperity created by the new technology are distributed (extremely) unequally, thus boosting economic polarization within society. This leads to further unrest. The early industrial age offers many such examples, the most famous being the textile industry, where skilled workers were quickly replaced. The digitalization of our societies is another such moment.

Among the case studies worth incorporating for the first sub-question are:

Luddite movement. The mechanization of textile production in early 19th century England triggered organized resistance from skilled artisans who saw their livelihoods threatened. Luddites were responding to the destruction of craft traditions and economic autonomy without compensatory social protections. The British government's harsh military response (executing many Luddites) shows how technological transitions backed by state power can suppress rather than address legitimate grievances.

European corn riots and food automation. The mechanization of agriculture and emergence of market-based food distribution triggered widespread food riots across Europe. When traditional moral economies (with expectations of fair prices during shortages) were replaced by profit-maximizing systems, communities felt betrayed by violations of the social contract. These riots often succeeded in forcing temporary price controls, demonstrating how technological efficiency alone cannot override deep social expectations.

United States railroad strikes of 1877. When railroad companies slashed wages during an economic downturn while maintaining shareholder dividends, workers organized the first nationwide strike in American history. The violent suppression by federal troops marked a clear tipping point: technological systems considered too vital for national functioning will be protected by state violence if necessary, regardless of distributional concerns. This specific example is an unnerving parallel to one of the possible AI trajectories.

And for the second one:

Social media and algorithmic curation. The unplanned, large-scale deployment of engagement-optimizing algorithms since 2004 has created profound social fragmentation, addictive behavior and vulnerability to manipulation. The lack of preemptive governance frameworks allowed business models to become entrenched before harms were fully understood.

Mass automobile adoption (1920s-1960s). The reshaping of urban and suburban landscapes around automobile infrastructure fundamentally altered community structures, often destroying existing neighborhoods. This shows how technologies that increase individual convenience can undermine social cohesion when deployed without consideration of their wider impacts.

This historical perspective suggests that AI governance should address not just technical safety but the maintenance of meaningful social contracts during periods of disruption. The pattern of technology-driven social fragmentation followed by belated regulatory responses has consistently produced avoidable harm that better anticipatory governance might prevent. This is key to any successful AI governance plan, as AI involves some extremely serious risks.

4. International agreements on technological restrictions

Regardless of where the most powerful AI systems will be developed, they will affect us globally. Thus the most effective solutions - and perhaps the only effective ones - must be international by nature.

The history of international agreements is relatively short, as until the Great War, war was thought of as a natural continuation of diplomacy and a common part of the interaction between states. Nor was any meaningful concept of “international” possible before the early stages of globalization, which would mean sometime between 1500 and 1800. It was during the Great War that the consensus moved towards the avoidance of war, which led to the creation of the first global international institution, the League of Nations, which failed miserably.

While international agreements are nowadays a relatively common phenomenon, their effectiveness on the most pressing problems is lacking. Although institutions such as the UN are net positive, they have not been able to fully resolve or prevent conflicts of different scales, or to prevent environmental harm. Numerous NGOs employ countless professionals who craft analyses and expert opinions, but these are not robustly translated into action.

Yet we were able to restrict nuclear arms and ban chemical weapons with good success. Why have some international treaties been successful? Some noteworthy and AI-relevant case studies to dig deeper into include:

Biological Weapons Convention (1972). The first multilateral disarmament treaty to ban an entire weapon category, showing exceptional international consensus on restricting dangerous technologies.

Chemical Weapons Convention (1993). A good example of a comprehensive approach with verification mechanisms and destruction protocols for existing stockpiles of dangerous substances.

Antarctic Treaty System (1959). Prevented militarization of an entire continent and established scientific cooperation, demonstrating how technology use can be channeled toward peaceful purposes.

Outer Space Treaty (1967). Prohibited placing nuclear weapons in space and established space as a domain for peaceful use, showing proactive governance before full technological exploitation.

As a caveat, many experts are pessimistic about the realistic prospects for binding international AI treaties. For example, the best forecasters in the field, Kokotajlo and his team, do not predict such a treaty in the coming years,7 mostly due to the speed of development and the conflict dynamics involved. A good example of the arguments against such deals is found in this excellent post by Anton Leicht. The usefulness of conducting research on this topic should be adjusted accordingly.8

Conclusion

Like all major technological innovations of our civilization, the dawn of advanced artificial intelligence brings with it huge societal changes. As similar major changes have happened before, a deep study of the patterns of reaction to emergent technology would help us navigate towards more sustainable governance solutions for AI.

In this text I have presented four themes that each warrant a study of their own. While I have outlined the main arguments for the relevance of these perspectives to AI’s transformational nature, this is a mere outline, and further study should elaborate on both the argumentation and the historical examples. Additionally, there exist numerous historical and social studies that are at least partially relevant to this analysis, which were not within the scope and purpose of this text but should be assimilated into the research.

AI governance research in the last couple of years has focused - with good results - on transformational technologies. Additionally, the early atomic age and nuclear disarmament agreements have been a major parallel for some governance researchers, and are likely exhausted as fruitful sources for more studies of this kind.

See for example Neel Nanda in this blog post.

As many technologies, including artificial intelligence, are dual-use by nature, the risk of misuse is arguably the biggest existing risk. We’ve had many authoritarian regimes and dictators take physical control over nations; they have needed to keep people alive for utilitarian purposes, but otherwise we have repeatedly ended up with unaligned systems. Detailed research into historical examples of beneficial technologies that were used malevolently despite initial optimism also has potential, especially if it were connected to the study of totalitarianism, fanaticism and the prevalence of dark triad traits in major leaders. Another adjacent and important topic would be historical mitigation strategies to prevent power concentration. These three combined seem relevant to our current situation, but I have omitted them from this text because their research questions are a bit harder to delimit.

Developed by A.F.K. Organski in the 1950s and later expanded by scholars like Jacek Kugler, Douglas Lemke, and others.

https://www.nationalsecurity.ai/. Relevant to offense-defense theory, page 2 of the Standard Version: “Defense often lags behind offense in both biology and critical infrastructure, leaving large swaths of civilization vulnerable. The relative security we enjoyed when only nation-states were capable of sophisticated attacks will no longer hold if highly capable AIs can guide extremist cells from plan to execution.”

Social contract is a theoretical framework most famously articulated by the philosopher Thomas Hobbes. According to Hobbes, the social contract is an agreement among individuals to surrender some of their natural freedoms to an authority (sovereign or state) in exchange for protection and social order. Without such a contract, Hobbes argued, humans would live in a "state of nature" characterized by constant war and fear, making life "solitary, poor, nasty, brutish, and short."

https://ai-2027.com/

There is, though, an argument from historical precedent concerning the worst outcomes of dual-use technology. As Hendrycks et al. present in their Superintelligence Strategy, page 10: “For decades, states that sparred on nearly every front nonetheless found common cause in denying catastrophic dual-use technologies to rogue actors. The Non-Proliferation Treaty, the Biological Weapons Convention, and the Chemical Weapons Convention drew the U.S., the Soviet Union, and China into an unlikely partnership, driven not by altruism but by self-preservation. None could confidently manage every threat alone, and all understood that accidental proliferation would imperil them equally.”